#015: Product Pulse AI Playbook: THINK phase

Setting the foundation for strong AI work

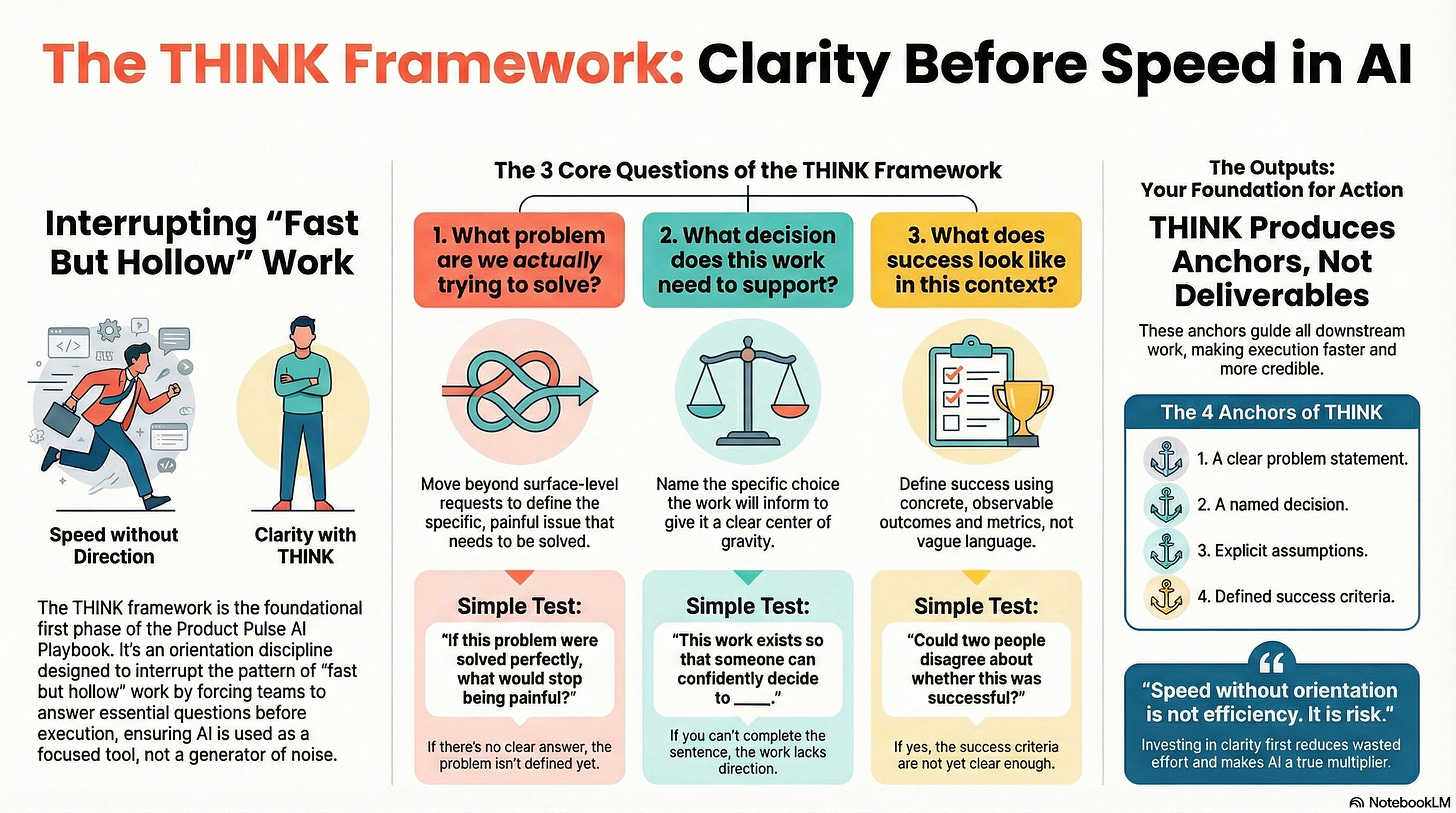

THINK: Clarity Before Speed

This is the second post in the Product Pulse Africa AI Playbook.

In the first post, I outlined the structure of the playbook and the problem it aims to solve: the growing gap between AI capabilities and meaningful work.

In this piece, we dig into the first phase of the playbook, THINK, which is where meaningful AI work begins.

A lot of people are using AI without any real structure, and it shows everywhere. We see endless summaries that say very little, rapid prototypes with no clear intent, and vibe coding that looks impressive but solves nothing in particular. The tools themselves are powerful, but the grounding is missing. As a result, teams end up shipping work that looks complete on the surface but feels hollow in practice.

The THINK phase in the product playbook exists to close this gap.

Why THINK Exists

Most modern teams operate under sustained pressure. They are expected to move quickly, respond continuously, and demonstrate progress even when the underlying problem is not yet well understood. AI has intensified this environment by making output easy and inexpensive to produce. As a result, speed is no longer the limiting factor. Clarity is.

When clarity is absent, teams default to activity rather than direction. Work begins before anyone agrees on where it is headed: teams start building, summarizing, designing, or prompting AI systems without first establishing what actually matters.

This pattern reliably leads to three failure modes:

The wrong problem is solved efficiently, because the problem was never clearly defined.

Polished outputs fail to support real decisions, because the decision was never named.

AI begins to shape direction, because intent was not established before execution.

THINK exists to interrupt this pattern.

It forces essential questions to surface early, while they can still influence the work. These questions often feel obvious, which is why they are skipped. Each person assumes someone else has already addressed them. In practice, they remain unanswered.

More importantly, THINK establishes the foundation for everything that follows:

BUILD assumes the problem is worth solving.

PROVE assumes success has been explicitly defined.

When THINK is weak, every downstream phase becomes fragile, regardless of how capable the tools or teams may be.

THINK From First Principles

At its core, THINK is an orientation discipline. It exists to establish shared understanding before any execution begins. Rather than asking how to do something, THINK forces agreement on what matters and why it matters in this specific context.

From first principles, THINK creates clarity across three essential dimensions:

The real problem being addressed, not the task or request that surfaced it.

The decision or outcome the work must support.

The definition of success, expressed in concrete and observable terms.

Everything else in the work flows from these foundations.

When even one of these dimensions is unclear, the work becomes structurally fragile. Teams may still move forward, ship deliverables, and produce work that appears impressive. However, that work is unlikely to hold up when it encounters real decisions, scrutiny, or changing conditions.

The sections that follow examine each of these dimensions in detail and show how they shape effective AI-assisted work.

1. What Problem Are We Actually Trying to Solve?

This is the most frequently skipped question in modern work, and it is often skipped more aggressively once AI enters the process.

Teams routinely conflate different things:

Tasks with problems

Requests with underlying needs

Symptoms with root causes

A stakeholder asks for a dashboard.

A leader requests a new feature.

A manager asks for a presentation.

These requests are not problems in themselves. They are signals that something is not working as expected. Treating them as problems leads teams to act on surface-level discomfort rather than the underlying issue.

The role of THINK is to translate these signals into a clearly articulated problem before any execution begins.

A Practical Scenario

Consider a product manager who is asked to “use AI to analyze onboarding.”

At first glance, this sounds specific enough to act on. In practice, it is still ambiguous. If the request goes straight into an AI tool, the outcome is predictable:

A lengthy summary of user feedback

A generic list of common onboarding issues

Confident language without a clear point of action

The output may be accurate, but it lacks direction because the problem was never defined.

THINK intervenes at this moment, before the tool is opened.

A Simple Test

A real problem statement should answer one question clearly:

If this problem were solved perfectly, what would stop being painful?

If there is no clear answer, the work is not yet grounded in a problem.

Example

Weak framing:

Improve onboarding experience

This framing is broad and subjective. It leads to vague AI prompts and output that is difficult to evaluate or act on.

Stronger framing:

New users drop off before completing account setup, preventing activation and early usage

This version identifies a specific failure, a clear user group, and a concrete outcome. AI can now be used purposefully, because it has a defined problem to work against.

THINK Template: Problem Framing

Before any execution or AI use, answer the following in plain language:

Who is experiencing the problem?

What behavior or outcome is currently broken?

What evidence suggests this is a real issue?

What happens if this problem remains unsolved?

Only once these questions are answered does it make sense to involve AI.

Otherwise, AI will still produce output, but that output will be untethered from intent. That is the core risk THINK is designed to prevent.

2. What Decision or Outcome Does This Work Need to Support?

Once the problem is clear, the next question is not what should be built, but what decision the work is meant to support.

Most work exists to inform a choice, even when that choice is never stated explicitly. Teams are usually trying to determine whether to invest, delay, change direction, or seek approval. When this decision remains implicit, the work loses its center of gravity.

In these situations, AI output quickly turns into noise. Teams can generate slides, summaries, and analyses indefinitely without moving anyone closer to action. The work looks productive, but nothing resolves.

THINK addresses this by requiring the decision to be named before any execution begins.

A Practical Scenario

Returning to the onboarding example, consider the prompt:

“Analyze this onboarding data and tell me what is wrong.”

The response may be accurate and well-structured, but it remains unhelpful if no decision has been defined. Without a decision in view, the output cannot be evaluated for relevance or usefulness.

THINK reframes the task before AI is involved, shifting the focus from analysis for its own sake to analysis in service of a choice.

A Simple Test

A useful way to check whether the decision is clear is to complete the following sentence:

This work exists so that someone can confidently decide to __.

If this sentence cannot be completed, the work lacks direction.

Example

Unclear framing:

Create a research summary on customer feedback

Clear framing:

Create a research summary so leadership can decide whether to prioritize onboarding fixes in Q2

In the second case, AI has a clear role. It is not asked to summarize everything, but to surface information that supports a specific decision.

THINK Template: Decision Framing

Before building anything or engaging AI, answer the following:

Who will use this work?

What decision are they trying to make?

What are the consequences of delaying or getting this decision wrong?

What information would materially improve the quality of the decision?

Answering these questions transforms AI from a content generator into a decision-support tool.

3. What Would Success Look Like in This Specific Context?

Even when the problem and decision are clear, AI can still produce misleading or low-value output if success is not defined precisely.

This is where teams often default to vague language. Statements such as “better engagement,” “stronger alignment,” or “more clarity” express intent, but they do not provide a standard for evaluation. They make it difficult to judge whether the work has actually done its job.

THINK requires success to be defined in concrete and, where relevant, measurable terms.

A Practical Scenario

Consider the prompt:

“Improve onboarding insights.”

Even if the problem and decision are well framed, this instruction is incomplete. Without a clear definition of success, there is no way to tell whether the output is sufficient. Evaluation becomes subjective, driven by how persuasive the analysis sounds rather than what it enables.

A Simple Test

A useful test for success criteria is the following:

Could two reasonable people disagree about whether this work was successful after reviewing the same output?

If the answer is yes, success has not been defined clearly enough.

Example

Weak success definition: Stakeholders feel informed

This provides no basis for evaluation.

Stronger success definition: After reviewing this brief, leadership agrees on one prioritized onboarding change, confirms it for inclusion in the next quarter’s roadmap, and raises fewer follow-up questions in subsequent reviews.

Where the work is tied to product or business outcomes, success criteria should also include metrics that reflect the intended impact.

For example:

Onboarding drop-off decreases from X% to Y% within a defined period

Activation rate increases by Z% following the implemented change

A go/no-go decision is reached within a specified timeframe

Metrics do not need to be exhaustive, but they must be relevant to the decision being supported.
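To make the metric examples concrete, here is a minimal Python sketch of how the drop-off and activation criteria might be checked. It assumes drop-off means the share of users who start onboarding but do not complete account setup; the function names, the counts, and the target threshold are hypothetical and are not figures from the playbook.

```python
def drop_off_rate(started: int, completed: int) -> float:
    """Share of users who started onboarding but did not finish account setup (assumed definition)."""
    if started <= 0:
        raise ValueError("started must be positive")
    if not 0 <= completed <= started:
        raise ValueError("completed must be between 0 and started")
    return (started - completed) / started


def meets_drop_off_target(started: int, completed: int, target: float) -> bool:
    """True if observed drop-off is at or below the target (the 'Y%' in the criterion)."""
    return drop_off_rate(started, completed) <= target


def relative_change(before: float, after: float) -> float:
    """Relative change, e.g. activation 40% -> 46% is a 15% relative increase (6 points absolute)."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before


# Illustrative numbers only: 1,000 users start onboarding, 700 finish setup.
rate = drop_off_rate(started=1000, completed=700)
print(f"Drop-off: {rate:.0%}")                     # Drop-off: 30%
print(meets_drop_off_target(1000, 700, 0.25))      # False
print(f"Activation: {relative_change(0.40, 0.46):.0%} relative")  # Activation: 15% relative
```

The `relative_change` helper is there because "increases by Z%" can be read either as a relative change or as percentage points; writing the criterion down forces the team to pick one before the work is evaluated.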

THINK Template: Success Criteria

Before executing or engaging AI, define success using observable outcomes and metrics:

What should be true once this work exists?

What decision or action should immediately follow?

Which metric or indicator confirms that the outcome has been achieved?

What would failure look like in concrete terms?

Defining success this way makes evaluation possible. It also gives AI a clear boundary: once these criteria are met, the work is complete.

Problem First, Then AI

When the problem is clearly framed, AI is no longer asked to “figure things out.” It is asked to work within boundaries.

Instead of prompting AI with:

Summarize user feedback

You prompt with:

Analyze this feedback to identify why new users fail to complete onboarding and where drop-off is most severe

The difference is not sophistication. It is orientation.
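One way to make this shift repeatable is to build prompts only from THINK anchors. The Python sketch below assembles a prompt from a task, the problem, the decision, and the success criteria, and refuses to produce one if any anchor is missing. The function name `build_prompt` and the prompt layout are illustrative assumptions; the playbook does not prescribe a prompt format or a specific AI tool.

```python
def build_prompt(task: str, problem: str, decision: str, success: list[str]) -> str:
    """Assemble a bounded prompt from THINK anchors (hypothetical format)."""
    anchors = {"task": task, "problem": problem, "decision": decision}
    # Collect any anchor that is empty or whitespace-only.
    missing = [name for name, value in anchors.items() if not value.strip()]
    if not success:
        missing.append("success")
    if missing:
        raise ValueError(f"THINK incomplete, missing: {', '.join(missing)}")
    criteria = "\n".join(f"- {item}" for item in success)
    return (
        f"Task: {task}\n"
        f"Problem: {problem}\n"
        f"Decision this supports: {decision}\n"
        f"Success criteria:\n{criteria}\n"
        "Stay within this problem and flag anything that does not bear on the decision."
    )


prompt = build_prompt(
    task="Analyze this feedback to identify why new users fail to complete onboarding "
         "and where drop-off is most severe",
    problem="New users drop off before completing account setup, preventing activation and early usage",
    decision="Whether leadership should prioritize onboarding fixes in Q2",
    success=["Leadership agrees on one prioritized onboarding change"],
)
print(prompt)
```

Raising an error instead of quietly sending a thinner prompt mirrors the point of THINK: if an anchor is missing, the right move is to go back and define it, not to let the tool fill the gap.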

Without that orientation, AI simply ends up manufacturing noise.

THINK as the Foundation for AI Work

THINK is not about exhaustive analysis.

It is about choosing the right constraints before speed takes over.

Good constraints reduce effort.

Bad or missing constraints multiply it.

When THINK is done properly:

Execution becomes faster, not slower

AI becomes a multiplier, not a distraction

Outputs are easier to evaluate and defend

Most importantly, the work earns credibility.

People trust it because it is anchored.

The True Outputs of THINK

THINK does not produce polished deliverables.

It produces anchors:

A clear problem statement

Explicit assumptions

A named decision

Defined success criteria

These anchors guide everything that follows.

Without them, BUILD becomes activity.

A Simple THINK Checklist

Before moving into execution, pause and ask:

Can I clearly state the problem in one sentence?

Do I know what decision or outcome this work supports?

Can I describe success without vague language?

Have I chosen constraints instead of avoiding them?

If any answer is no, slow down.
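The checklist can also run as a quick self-check. This Python sketch encodes the four questions. The first and third are approximated with crude heuristics: counting sentences, and scanning for a small, hypothetical list of vague terms taken from the examples in this post. The constraint in the usage example is also illustrative. A heuristic cannot tell whether a problem is the right one, so read an empty result as "no obvious gaps", not as proof that THINK is done.

```python
import re

# Hypothetical word list, drawn from the vague phrases quoted in this post.
VAGUE_TERMS = ("better", "stronger", "more clarity", "improve", "feel informed")


def think_gaps(problem: str, decision: str, success: str, constraints: list[str]) -> list[str]:
    """Return the checklist questions that appear unanswered (empty list means no obvious gaps)."""
    gaps = []
    # 1. Problem stated in exactly one sentence (split on sentence-ending punctuation).
    sentences = [s for s in re.split(r"[.!?]+", problem) if s.strip()]
    if len(sentences) != 1:
        gaps.append("State the problem in one sentence.")
    # 2. A named decision or outcome.
    if not decision.strip():
        gaps.append("Name the decision or outcome this work supports.")
    # 3. Success described without vague language (substring match, case-insensitive).
    lowered = success.lower()
    if not success.strip() or any(term in lowered for term in VAGUE_TERMS):
        gaps.append("Describe success without vague language.")
    # 4. At least one constraint explicitly chosen.
    if not constraints:
        gaps.append("Choose constraints instead of avoiding them.")
    return gaps


gaps = think_gaps(
    problem="New users drop off before completing account setup, preventing activation and early usage",
    decision="Whether to prioritize onboarding fixes in Q2",
    success="Stakeholders feel informed",
    constraints=["Analyze Q1 onboarding feedback only"],
)
print(gaps or "No obvious gaps; proceed.")
# ['Describe success without vague language.']
```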

Speed without orientation is not efficiency.

It is risk.

What Comes Next

THINK is a discipline, not a one-time step.

It establishes orientation, defines constraints, and makes success explicit. Once that foundation is in place, the work can move forward with purpose rather than momentum alone.

This is where the next phase of the Product Pulse Africa AI Playbook begins: BUILD.

BUILD is not about experimentation for its own sake. It is the phase where AI is applied deliberately, within the boundaries set by THINK, to produce work that is executable, evaluable, and tied to a real decision.

Check out the video below to get a better summary of the framework: